For documentation on the current version, please check Knowledge Base.

Supported Mapping Resources

This page documents all supported data types for Mobile Mapping, Aerial, and Aerial Oblique Mapping Resources.

To read more about the concepts of a Mapping Run and Resource Groups, see Orbit Mapping Resource.

General Notes

Required vs Optional Mapping Run resources

A mapping run is a combination of multiple individual resources that are logically related and collected together by a mapping system. A mapping run is a flexible structure: not all components listed below are mandatory.

Import Data

Orbit imports 3D mapping data using intelligent templates. An import template is configured for a well-known set of configurations, given the carrier (vehicle, UAV, aircraft, etc.) setup and the available data.

The use of templates simplifies and standardizes the import procedure for a well-known set of data.

Read more and download templates here: Mapping Resource Import Templates.

Coordinate Reference Systems

Orbit supports any combination of 3D mapping data and any coordinate reference system (CRS) on import. Keep the following in mind:

- The coordinate reference system should be part of the metadata of each resource.

- It is advised that all georeferenced resources use the same CRS, to avoid the processing time of continuous coordinate transformations. If required, convert the CRS when importing the run.

- When converting the coordinate system of image positions, apply the corresponding adjustments to all orientation angles that refer to the reference direction of the coordinate system (most probably north).

- Coordinates can be expressed using any supported coordinate system.

After import, it is strongly advised that all mobile mapping resources use the same coordinate reference system. If required, coordinates can be re-projected or transformed on import.

Timestamps

Optionally, timestamps can be imported. The time value can be a text string, an integer, or a decimal value.

String values are displayed as-is and cannot be used for Content Manager post-processing or point cloud view restrictions.

Post-processing and point cloud view restrictions require a single time reference with “seconds” as the time unit.

Use of Absolute (Standard) GPS Time is advised.

If Absolute GPS Time values (600,000,000 < Timestamp < 3,000,000,000) are detected, Orbit displays them as “yyyy-mm-dd hh:mm:ss”.

If GPS Week Time Seconds values (0 ≤ Timestamp ≤ 604,800) are detected, Orbit displays them as “d hh:mm:ss”.

Notes on Absolute GPS Time:

- Orbit doesn't take leap seconds into account. More information: Wikipedia, GPS Timekeeping and Leap seconds.

- When Absolute GPS Time values are stored in point cloud LAS files, the value actually stored is the Absolute GPS Time minus 1,000,000,000. More information: ASPRS LASer File Format Exchange Activities.
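
The detection ranges and the LAS offset described above can be illustrated with a short Python sketch. This is not Orbit's implementation: the function names are hypothetical, and the sketch assumes that Absolute GPS Time counts seconds since the GPS epoch (1980-01-06 00:00:00). Like Orbit, it ignores leap seconds.

```python
from datetime import datetime, timedelta

GPS_EPOCH = datetime(1980, 1, 6)   # assumed start of Absolute GPS Time
LAS_OFFSET = 1_000_000_000         # offset subtracted when storing GPS time in LAS
SECONDS_PER_WEEK = 604_800


def format_timestamp(value: float) -> str:
    """Format a numeric timestamp following the ranges described above."""
    if 600_000_000 < value < 3_000_000_000:
        # Absolute GPS Time: seconds since the GPS epoch, leap seconds ignored.
        moment = GPS_EPOCH + timedelta(seconds=int(value))
        return moment.strftime("%Y-%m-%d %H:%M:%S")
    if 0 <= value <= SECONDS_PER_WEEK:
        # GPS Week Time Seconds: day of the week plus time of day.
        days, rest = divmod(int(value), 86_400)
        hours, rest = divmod(rest, 3_600)
        minutes, seconds = divmod(rest, 60)
        return f"{days} {hours:02d}:{minutes:02d}:{seconds:02d}"
    # Anything else is shown unchanged in this sketch.
    return str(value)


def las_to_absolute(las_gps_time: float) -> float:
    """Recover Absolute GPS Time from the value stored in a LAS file."""
    return las_gps_time + LAS_OFFSET
```

For example, `format_timestamp(90_061)` yields a day index followed by a time of day, while a value such as 1,000,000,000 is formatted as a calendar date.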

Trajectory of Mapping System

A trajectory of the mobile mapping vehicle is optional. It can be used to display the accuracy or to adjust, clip, or extract a segment of the entire mobile mapping run.

Prepare the trajectory file in the same way as the image position and orientation file, see “Photo positions, orientations and metadata”.

| Field | Description | Data type | Units | Necessity |
|---|---|---|---|---|
| Timestamp | Time at recording, see “General Notes” above. | integer, decimal, string | | optional |
| X | X, Longitude or Easting of reference point | decimal | degrees or meters | required |
| Y | Y, Latitude or Northing of reference point | decimal | degrees or meters | required |
| Z | Z or Height of reference point | decimal | degrees or meters | required |
| Accuracy | GPS accuracy indicator of the trajectory, intended for the data manager only. Based on this indication, the data manager can estimate the absolute accuracy of the data at the time of recording. This helps to decide if and where to measure ground control points and apply trajectory adjustments. | decimal | | optional |

Imagery

Orbit supports spherical and planar images.

There are no limitations on the number of cameras, the type of camera, or the resolution of images.

One camera import requires one set of images and one position and orientation file.

Photo positions, orientations and metadata

Image positions and orientations describe the absolute position and orientation at the time each image was recorded.

There are two possibilities:

- the absolute position and orientation of each camera at the time of recording

- the absolute position and orientation of a reference frame at the time of recording, combined with the fixed relative offset of each camera to this reference frame (also known as lever arm and boresight).

Absolute positions and orientations

Supported formats

Preferably .txt or .csv files.

But any supported point vector resource can be used.

Specifications

- One text file for each camera containing at least the below-described information for each image.

- Column headers are optional and free of choice.

- Columns and order of columns can be customized.

- Coordinates can be expressed using any supported coordinate system.

After import, it is strongly advised that all mobile mapping resources use the same coordinate reference system. If required coordinates can be re-projected or transformed on import. - Orientations can be expressed as :

- Pan (heading, yaw), Tilt (pitch), Roll 2)

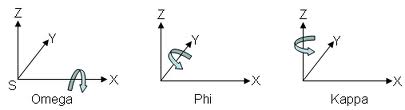

Reference direction and orientation are documented below but can be customized. - Omega, Phi, Kappa 3).

Reference direction is pointing eastwards, but can be customized. - Normalized direction and up vector (direction Easting, direction Northing, direction Elevation, up Easting, up Northing, up Elevation)

- Origin (rotation = 0°) or rotation offset and positive sense of rotation of orientation angles can be customized.
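
As an illustration of the specifications above, the following Python sketch reads a position and orientation file with a configurable column layout. The column order, the `;` separator, the sample rows, and the function name are all hypothetical; they only show how a customized column mapping might be handled, not how Orbit's import templates work.

```python
import csv
import io

# Hypothetical column layout: logical field name -> column index in the file.
COLUMNS = {"filename": 0, "x": 1, "y": 2, "z": 3, "pan": 4, "tilt": 5, "roll": 6}

# Hypothetical sample content without column headers.
SAMPLE = """image_0001.jpg;4.0001;51.1001;12.5;90.0;0.0;0.0
image_0002.jpg;4.0002;51.1001;12.6;91.5;-0.2;0.1
"""


def read_positions(text, columns=COLUMNS, delimiter=";", has_header=False):
    """Return one dict per image with the filename and numeric fields."""
    reader = csv.reader(io.StringIO(text), delimiter=delimiter)
    if has_header:
        next(reader, None)  # column headers are optional, so skip on request
    records = []
    for row in reader:
        if not row:
            continue  # ignore empty lines
        record = {"filename": row[columns["filename"]]}
        for field, index in columns.items():
            if field != "filename":
                record[field] = float(row[index])
        records.append(record)
    return records
```

Calling `read_positions(SAMPLE)` returns two records. Changing `COLUMNS` is enough to support a different column order.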

Attributes

| Field | Description | Data type | Units | Necessity |
|---|---|---|---|---|
| Filename | Image file name as it is on disk, or any reference to the image file. | string | | required |
| Timestamp | Time at recording, see “General Notes” above. | string, integer, decimal | | optional |
| X | X, Longitude or Easting of reference point | decimal | degrees or meters | required |
| Y | Y, Latitude or Northing of reference point | decimal | degrees or meters | required |
| Z | Z or Height of reference point | decimal | degrees or meters | required |
| Pan / Heading | Horizontal angle between reference frame and north. Positive for clockwise rotation of the reference frame. Value 0 looking north, value 90 looking east. | decimal | degrees, radians, or grad | required |
| Tilt / Pitch | Vertical angle or inclination about the lateral axis between reference frame and horizontal plane. Positive when the reference frame goes up / looks up. Value 0 looking horizontally, value 90 looking vertically at the sky, value -90 looking down. | decimal | degrees, radians, or grad | required |
| Roll | Vertical angle or inclination about the longitudinal axis between reference frame and the horizontal plane. Positive when the reference frame rolls to the left, right-hand turn. | decimal | degrees, radians, or grad | required |
| Omega | Rotation of the reference frame about X. | decimal | degrees, radians, or grad | |
| Phi | Rotation of the reference frame about Y. | decimal | degrees, radians, or grad | |
| Kappa | Rotation of the reference frame about Z. | decimal | degrees, radians, or grad | |
| Direction_Easting | Component of the unit vector defining heading and pitch. By definition each value lies between -1 and 1. | decimal | undefined | |
| Direction_Northing | Component of the unit vector defining heading and pitch. | decimal | undefined | |
| Direction_elevation | Component of the unit vector defining heading and pitch. | decimal | undefined | |
| Up_Easting | Component of the unit vector defining roll. By definition each value lies between -1 and 1. | decimal | undefined | |
| Up_Northing | Component of the unit vector defining roll. | decimal | undefined | |
| Up_elevation | Component of the unit vector defining roll. | decimal | undefined | |
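
The relation between the Pan / Tilt angles and the direction vector follows from the definitions in the table: pan is measured clockwise from north (0 looking north, 90 looking east) and tilt is positive when looking up. The Python sketch below works under exactly those default conventions. The function name is hypothetical, and the up vector is left out because it depends on the chosen roll convention.

```python
import math


def direction_vector(pan_deg: float, tilt_deg: float):
    """Return (easting, northing, elevation) of the unit viewing direction."""
    pan = math.radians(pan_deg)
    tilt = math.radians(tilt_deg)
    easting = math.sin(pan) * math.cos(tilt)    # pan 90 looks east
    northing = math.cos(pan) * math.cos(tilt)   # pan 0 looks north
    elevation = math.sin(tilt)                  # tilt 90 looks at the sky
    return easting, northing, elevation
```

If the origin or the positive sense of rotation is customized on import, the signs and offsets in this sketch would have to be adapted accordingly.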

Relative position and orientation

The fixed relative position and orientation of each camera to the reference frame must be known only when the absolute position and orientation of a reference frame at the time of recording are used. They can be ignored when the absolute position and orientation of the images themselves are used.

| Field | Description | Data type | Units | Necessity |
|---|---|---|---|---|
| CameraName | Unique name to identify the camera and to link these camera specifications with the position and orientation file. | string | | optional |
| CameraDeltaX | Fixed distance in X, expressed in meters, from the origin of the camera reference frame to the IMU/GPS reference frame. | decimal | meters | optional |
| CameraDeltaY | Fixed distance in Y, expressed in meters, from the origin of the camera reference frame to the IMU/GPS reference frame. | decimal | meters | optional |
| CameraDeltaZ | Fixed distance in Z, expressed in meters, from the origin of the camera reference frame to the IMU/GPS reference frame. | decimal | meters | optional |
| CameraDeltaPan | Fixed pan of camera reference frame to IMU/GPS reference frame. Positive for clockwise rotation of camera frame. | decimal | degrees, radians, or grad | optional |
| CameraDeltaTilt | Fixed tilt of camera reference frame to IMU/GPS reference frame. Positive when camera frame goes up / looks up. | decimal | degrees, radians, or grad | optional |
| CameraDeltaRoll | Fixed roll of camera reference frame to IMU/GPS reference frame. Positive when camera reference frame rolls to the left. | decimal | degrees, radians, or grad | optional |
| CameraDeltaOmega | Fixed Omega of camera reference frame to IMU/GPS reference frame. | decimal | degrees, radians, or grad | |
| CameraDeltaPhi | Fixed Phi of camera reference frame to IMU/GPS reference frame. | decimal | degrees, radians, or grad | |
| CameraDeltaKappa | Fixed Kappa of camera reference frame to IMU/GPS reference frame. | decimal | degrees, radians, or grad | |

Origin (rotation = 0°) or rotation offset and positive sense of rotation of orientation angles can be customized.

Metadata

Metadata is optional. There is no limit on the number of image meta-attributes that can be added on import, for example:

- date

- timestamp

- date time

- accuracy

- GPS information

- photo group id

- …

Spherical images

Supported formats

Preferably .jpg files.

But any supported image resource can be used.

Supported types

Orbit supports any Spherical and Cubic Panorama, independently of file size or pixel resolution.

For example, Ladybug 3 and 5 are supported.

Equi-rectangular Panoramas

When working with spherical panoramic images, Orbit uses the stitched 360 x 180 degree panoramic image.

- Stitching the images should be done by the camera software, which knows and applies all camera calibration and distortion parameters.

- The orientation angles are applied to the center pixel of the image. If required, this can be re-configured via a customized import.

- Any pixel resolution with a 2 x 1 ratio can be used, but an equirectangular panoramic image should always cover 360 x 180 degrees.

There are no limitations to the image pixel resolution. If required, Orbit can optimize high-resolution images (> 8000 x 4000 pixels) for online use.
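
Because an equirectangular panorama covers 360 x 180 degrees and its center pixel carries the orientation angles, every pixel can be mapped to an angular offset from that center. The Python sketch below shows this mapping. The function name is hypothetical, and the sign conventions (pan offset increasing to the right, tilt offset increasing upward, row 0 at the top of the image) are assumptions.

```python
def pixel_to_angles(col: float, row: float, width: int, height: int):
    """Return (pan_offset, tilt_offset) in degrees relative to the image center."""
    if width != 2 * height:
        raise ValueError("an equirectangular panorama needs a 2 x 1 pixel ratio")
    pan_offset = (col / width - 0.5) * 360.0     # full width spans 360 degrees
    tilt_offset = (0.5 - row / height) * 180.0   # full height spans 180 degrees
    return pan_offset, tilt_offset
```

Adding these offsets to the pan and tilt of the image gives an approximate viewing direction for that pixel when roll is zero.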

Cubic Panoramas

Cubic Panoramas are supported since Orbit AIM version 10.5.1.

When working with cubic panoramic images, Orbit uses the 6 original equirectangular images.

- To display and use cubic panoramas properly, all orientation, calibration, and distortion parameters of the cameras used must be known.

- Any pixel resolution can be used.

Planar Images

There are no limitations on the type of camera or the resolution of images. The maximum number of planar images is 20. One camera import requires one set of images and one position and orientation file.

Supported formats

Preferably .jpg files. But any supported image resource can be used.

Supported types

Any size, any resolution.

Preferably undistorted images, see Lens distortion.

Camera calibration

For each camera, the following camera and sensor specifications are used to optimize the integration and accuracy in Orbit.

| What | Description | Data type | Units | Necessity |
|---|---|---|---|---|
| CameraName | Unique name to identify the camera and to link these camera specifications with the position and orientation file. | string | | optional |
| SensorName | Reference of the sensor, to make it possible to review the sensor specifications. | string | | optional |
| SensorPixelSize | Physical size of pixels on the sensor CCD, expressed in mm. | decimal | mm | required |
| SensorPixelCountX | Number of pixels in width of the camera sensor and image. | integer | pixels | required |
| SensorPixelCountY | Number of pixels in height of the camera sensor and image. | integer | pixels | required |
| SensorPixelPPX | The principal point value along the sensor width, expressed in number of pixels from the lower-left corner. Wikipedia Focal Point | decimal | pixels | required |
| SensorPixelPPY | The principal point value along the sensor height. | decimal | pixels | required |
| FocalLength | The focal length of the lens, expressed in mm. Wikipedia Focal Length | decimal | mm | required |
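
To show how these values relate to each other, the Python sketch below derives the physical sensor size, the field of view, and the focal length in pixels from SensorPixelSize, SensorPixelCountX or SensorPixelCountY, and FocalLength. It assumes an ideal pinhole camera without distortion; the function names are hypothetical.

```python
import math


def sensor_size_mm(pixel_size_mm: float, pixel_count: int) -> float:
    """Physical sensor dimension along one axis, in mm."""
    return pixel_size_mm * pixel_count


def field_of_view_deg(pixel_size_mm: float, pixel_count: int,
                      focal_length_mm: float) -> float:
    """Field of view along one axis for an ideal pinhole camera."""
    size = sensor_size_mm(pixel_size_mm, pixel_count)
    return math.degrees(2.0 * math.atan(size / (2.0 * focal_length_mm)))


def focal_length_px(focal_length_mm: float, pixel_size_mm: float) -> float:
    """Focal length expressed in pixels instead of mm."""
    return focal_length_mm / pixel_size_mm
```

A quick check of this kind can reveal unit mistakes, such as a pixel size entered in micrometers instead of mm, before the calibration is used for import.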

Lens Distortion

The use of undistorted planar images is preferred.

When providing distorted images, lens distortion parameters must be provided according to the Brown model as implemented in OpenCV.

| What | Description | Data type | Units | Necessity |
|---|---|---|---|---|
| k1,k2,k3,p1,p2,fx,fy | Distortion parameters according to the formulas of D.C. Brown: radial (k1, k2, k3) and tangential (p1, p2) coefficients, plus the focal lengths fx and fy expressed in pixels. Wikipedia Distortion | decimal | | optional |
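
For reference, the forward distortion model documented by OpenCV for the coefficients k1, k2, k3, p1, and p2 can be sketched in Python as follows. The input `x`, `y` are normalized image coordinates (already divided by depth). The function names are hypothetical, and the principal point `cx`, `cy` is an extra input that is assumed to be expressed in the same pixel coordinate system as the resulting image coordinates.

```python
def distort(x, y, k1, k2, k3, p1, p2):
    """Apply radial and tangential distortion to normalized coordinates."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x_d, y_d


def to_pixels(x_d, y_d, fx, fy, cx, cy):
    """Scale distorted normalized coordinates to pixel coordinates."""
    return fx * x_d + cx, fy * y_d + cy
```

With all coefficients set to zero, `distort` returns its input unchanged, which corresponds to the preferred case of undistorted images.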

Street Level\Indoor

Oblique

Nadir

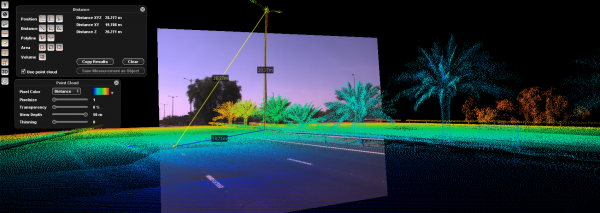

Point Cloud

LiDAR point cloud or point cloud derived from dense matching.

There are no limitations on the number of points, files, or the total size.

Required for

- Mobile Mapping: Recommended

- Oblique Mapping: Optional

- Aerial Mapping: Strongly recommended

Supported formats

- One or more supported point cloud resources, see Supported Generic Resources.

- Preferred: *.las files.

Additional Notes for ASCII text files

When using an ASCII text file as point cloud import, it is possible to configure the data structure:

- Flat ASCII text file: separator, columns, and column headers

- R, G, B, I value ranges 0-255, values may be empty

- Character to define end of line
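
A minimal Python sketch of reading one record from such a flat ASCII file is given below. The column order X, Y, Z, R, G, B, I, the default `,` separator, and the function name are assumptions for illustration only; the actual structure is whatever is configured on import.

```python
def parse_point(line, separator=","):
    """Parse one 'X,Y,Z,R,G,B,I' record; empty R, G, B, I fields become None."""
    fields = [field.strip() for field in line.rstrip("\r\n").split(separator)]
    fields += [""] * (7 - len(fields))   # pad missing trailing fields
    x, y, z = (float(value) for value in fields[:3])
    extras = []
    for value in fields[3:7]:
        if value == "":
            extras.append(None)          # R, G, B, I values may be empty
        else:
            number = int(value)
            if not 0 <= number <= 255:
                raise ValueError(f"value out of range 0-255: {number}")
            extras.append(number)
    return (x, y, z, *extras)
```

The `rstrip("\r\n")` call makes the sketch tolerant of both common end-of-line conventions.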

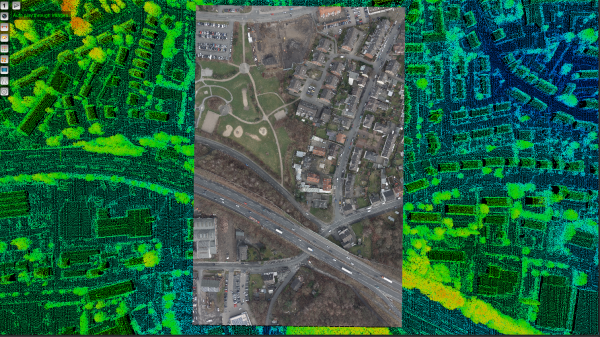

DEM

A Digital Terrain Model or Digital Surface Model covering the same area as the Mapping Resources.

Either a DTM or a DSM can be used, but we recommend a DTM for measurements and navigation.

Wikipedia, Digital Elevation Model.

Required for

- Mobile Mapping: Optional

- Oblique Mapping: Recommended

- Aerial Mapping: Optional

Supported formats

- One or more supported raster resources, see Supported Generic Resources.

- Preferred: single raster GeoTIFF file.

Notes

- CRS: Any supported projected coordinate system can be used.

Textured Mesh

A Textured Mesh covering the same area as the Mapping Resources can be used. It can be part of the run, but it can only be visualized using the Map GL component.

Use the Cesium 3D Tiles format.

Orthophoto

An Orthophoto covering the same area as the Mapping Resources can be used. Any supported multiresolution image resource can be used.

Image Annotations

Image annotation files are linked to the Mapping Resource images by filename.

Supported formats

- One csv or xml annotation file with annotations for all imagery.

- One xml annotation file per image.

Generic

<?xml version="1.0" ?> <annotation> <folder></folder> <filename>DSC02490.JPG</filename> <object> <tags>corrosion,structural_steel,light</tags> <comment></comment> <bound> <xmin>1541</xmin> <xmax>2008</xmax> <ymin>4257</ymin> <ymax>4555</ymax> </bound> </object> </annotation>
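
The generic format can be read with any XML parser. The Python sketch below uses the standard library to extract the filename, tags, and bounding box from a copy of the example above. The function name and the returned structure are hypothetical.

```python
import xml.etree.ElementTree as ET

SAMPLE = """<?xml version="1.0" ?>
<annotation>
  <folder></folder>
  <filename>DSC02490.JPG</filename>
  <object>
    <tags>corrosion,structural_steel,light</tags>
    <comment></comment>
    <bound><xmin>1541</xmin><xmax>2008</xmax><ymin>4257</ymin><ymax>4555</ymax></bound>
  </object>
</annotation>"""


def read_annotation(xml_text):
    """Return the image filename and a list of annotated objects."""
    root = ET.fromstring(xml_text)
    filename = root.findtext("filename")
    objects = []
    for obj in root.findall("object"):
        # Tags are stored as one comma-separated string.
        tags = [tag for tag in (obj.findtext("tags") or "").split(",") if tag]
        bound = obj.find("bound")
        box = {key: int(bound.findtext(key))
               for key in ("xmin", "xmax", "ymin", "ymax")}
        objects.append({"tags": tags,
                        "comment": obj.findtext("comment") or "",
                        "box": box})
    return filename, objects
```

The returned filename is the value that links the annotation to the corresponding Mapping Resource image.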

Context Insight

Version 2.0

<?xml version="1.0" encoding="utf-8"?> <ContextScene version="2.0"> <PhotoCollection> <Photos> <Photo id="0"> <ImagePath>D:/DATA/BE Lokeren Orbit - MMS by Topcon TEP/panorama1/original/LB_5000000.jpg</ImagePath> </Photo> <Photo id="1"> <ImagePath>D:/DATA/BE Lokeren Orbit - MMS by Topcon TEP/panorama1/original/LB_5000001.jpg</ImagePath> </Photo> </Photos> </PhotoCollection> <Annotations> <Labels> <Label id="1"> <Name>person</Name> </Label> <Label id="3"> <Name>car</Name> </Label> <Label id="8"> <Name>truck</Name> </Label> </Labels> <Objects2D> <ObjectsInPhoto> <PhotoId>0</PhotoId> <Objects> <Object2D id="0"> <LabelInfo> <Confidence>0.9734092</Confidence> <LabelId>1</LabelId> </LabelInfo> <Box2D> <xmin>0.694173574447632</xmin> <ymin>0.540982902050018</ymin> <xmax>0.717123627662659</xmax> <ymax>0.666199862957001</ymax> </Box2D> </Object2D> <Object2D id="1"> <LabelInfo> <Confidence>0.8548903</Confidence> <LabelId>3</LabelId> </LabelInfo> <Box2D> <xmin>0.00750905275344849</xmin> <ymin>0.559191405773163</ymin> <xmax>0.145229429006577</xmax> <ymax>0.784758269786835</ymax> </Box2D> </Object2D> </Objects> </ObjectsInPhoto> <ObjectsInPhoto> <PhotoId>1</PhotoId> <Objects> <Object2D id="0"> <LabelInfo> <Confidence>0.9596778</Confidence> <LabelId>3</LabelId> </LabelInfo> <Box2D> <xmin>0.00675202906131744</xmin> <ymin>0.552075982093811</ymin> <xmax>0.148410230875015</xmax> <ymax>0.789381384849548</ymax> </Box2D> </Object2D> </Objects> </ObjectsInPhoto> </Objects2D> </Annotations> </ContextScene>

Version 1.0

<?xml version="1.0" encoding="utf-8"?> <Scene version="1.0"> <PositioningLevel>Unknown</PositioningLevel> <SpatialReferenceSystems/> <Devices/> <Poses/> <Shots> <Shot id="0"> <DeviceId>0</DeviceId> <PoseId>0</PoseId> <ImagePath>Z:/Movyon/POC_bridge/Carreggiata_Nord/Carreggiata_Nord/01_Spalla inizio/P5590560.JPG</ImagePath> <NearDepth>3.41018193441476</NearDepth> <MedianDepth>5.31374846082021</MedianDepth> <FarDepth>6.03807500283101</FarDepth> </Shot> <Shot id="1"> <DeviceId>0</DeviceId> <PoseId>1</PoseId> <ImagePath>Z:/Movyon/POC_bridge/Carreggiata_Nord/Carreggiata_Nord/01_Spalla inizio/P5600561.JPG</ImagePath> <NearDepth>3.35579477082049</NearDepth> <MedianDepth>5.22362535386351</MedianDepth> <FarDepth>5.86464739324289</FarDepth> </Shot> </Shots> <TiePoints/> <PointConstraints/> <Learning> <Labels> <Label> <Id>1</Id> <Name>person</Name> </Label> <Label> <Id>3</Id> <Name>car</Name> </Label> </Labels> <ObjectsInImages> <ObjectsInImage> <ImageId>0</ImageId> <Objects2D> <Object2D> <Id>0</Id> <LabelInfo> <Confidence>0.846392</Confidence> <LabelId>9</LabelId> </LabelInfo> <Box2D> <xmin>0.059297751635313</xmin> <ymin>0.145194128155708</ymin> <xmax>0.999414801597595</xmax> <ymax>0.438706368207932</ymax> </Box2D> </Object2D> </Objects2D> </ObjectsInImage> <ObjectsInImage> <ImageId>1</ImageId> <Objects2D> <Object2D> <Id>0</Id> <LabelInfo> <Confidence>0.9815791</Confidence> <LabelId>9</LabelId> </LabelInfo> <Box2D> <xmin>0.0492010489106178</xmin> <ymin>0.213278770446777</ymin> <xmax>0.978382289409637</xmax> <ymax>0.549207985401154</ymax> </Box2D> </Object2D> </Objects2D> </ObjectsInImage> </ObjectsInImages> <Objects3D/> <Segmentations2D/> <Segmentations3D/> </Learning> <PointClouds/> <Pairs/> <AutomaticTiePoints/> </Scene>
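
The Box2D values in both Context Insight examples lie between 0 and 1, which suggests that they are expressed as fractions of the image width and height. Under that assumption, a box can be converted to pixel coordinates as in the Python sketch below. The function name and the example image size are hypothetical.

```python
def box_to_pixels(box, image_width, image_height):
    """Scale a Box2D given as image fractions to whole pixel coordinates."""
    return {
        "xmin": round(box["xmin"] * image_width),
        "xmax": round(box["xmax"] * image_width),
        "ymin": round(box["ymin"] * image_height),
        "ymax": round(box["ymax"] * image_height),
    }


# Hypothetical box on an 8000 x 4000 pixel panorama.
example = box_to_pixels(
    {"xmin": 0.25, "xmax": 0.5, "ymin": 0.1, "ymax": 0.9}, 8000, 4000
)
```

The generic format, by contrast, stores its bounds directly as pixel values, so no scaling is needed there.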

Video

Supported formats

Preferably .mp4 files.

When using cloud blob storage, the storage needs to support “range requests” to open the video at a specific time offset from the start.

Attributes

| What | Description | Data type | Units | Necessity |
|---|---|---|---|---|
| VideoFileName | The URL pointing to the online storage location of the video. | string | | required |
| VideoTime | The time offset from the start of the video. | integer | milliseconds | required |

References and Geodata

Any supported reference data can be added into Orbit. Reference data is not part of a Run.